As AI systems scale in complexity and capability, the demands placed on storage infrastructure are evolving just as rapidly. From training massive models to serving real-time inference, storage is no longer a passive back end; it’s a performance-critical component of the AI stack. The MLPerf Storage v2.0 benchmark suite offers a fresh lens into how modern SSDs handle the unique I/O patterns of AI workloads, from high-throughput training to fault-tolerant checkpointing.

But the story doesn’t end there. As inference workloads shift toward retrieval-augmented generation (RAG), vector databases and key-value (KV) caches are emerging as the next frontier in storage optimization. These workloads introduce new challenges, from small, latency-sensitive reads against on-disk indexes to sustained, write-heavy cache traffic.

In this post, I’ll walk through the latest MLPerf Storage results, unpack the workloads behind them and explore how future benchmarks will need to adapt to the rise of vector search and KV cache reuse.

If you’d like to know more, come see me at SNIA Developer Conference (SDC), where I’ll take an in-depth look at these topics in my session on Sept. 17 at 8:30 a.m.: Discussion and analysis of the MLPerf Storage benchmark suite and AI storage workloads

MLPerf Storage v2.0: Key results and workloads

Benchmarking storage for AI workloads is notoriously difficult. Real training jobs require expensive accelerators, and publicly available datasets are often too small to reflect production-scale behavior.

MLPerf Storage, developed by the MLCommons consortium, fills this gap. It emulates realistic AI training workloads using synthetic datasets and real data loaders (PyTorch, TensorFlow, DALI, etc.), while replacing compute with calibrated sleep intervals to simulate GPU behavior. This approach enables accurate, scalable benchmarking of storage systems without needing thousands of GPUs.
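The idea of replacing compute with a sleep can be sketched in a few lines. This is only an illustration of the concept, not the benchmark's actual code: the function name `emulate_training`, the `read_batch` callable and the sleep value are all hypothetical, and the loop is strictly serial, whereas real data loaders prefetch batches in the background.

```python
import time

def emulate_training(read_batch, num_batches, compute_time_s):
    """Run an emulated training loop and return accelerator utilization.

    read_batch     -- callable that performs the storage read for one batch
    num_batches    -- number of batches to process
    compute_time_s -- sleep that stands in for accelerator compute per batch
                      (hypothetical value; the benchmark calibrates its own)
    """
    start = time.perf_counter()
    for _ in range(num_batches):
        read_batch()                # real I/O would happen here
        time.sleep(compute_time_s)  # emulated accelerator work
    elapsed = time.perf_counter() - start
    # Fraction of wall-clock time the emulated accelerator was "busy".
    return (num_batches * compute_time_s) / elapsed

if __name__ == "__main__":
    # Stand-in for a storage read that takes roughly 20 ms per batch.
    slow_read = lambda: time.sleep(0.02)
    print(f"utilization: {emulate_training(slow_read, 5, 0.02):.2f}")
```

In this serial sketch, any time spent waiting on `read_batch` directly lowers the utilization figure, which is why a storage system that cannot keep up shows up as an idle accelerator.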

Micron has been deeply involved in MLPerf Storage since its inception and has contributed to every major release — v0.5, v1.0, and now v2.0 — submitting results and helping shape the benchmark workloads.

With v2.0, MLPerf Storage expands beyond training ingest to include checkpointing workloads.

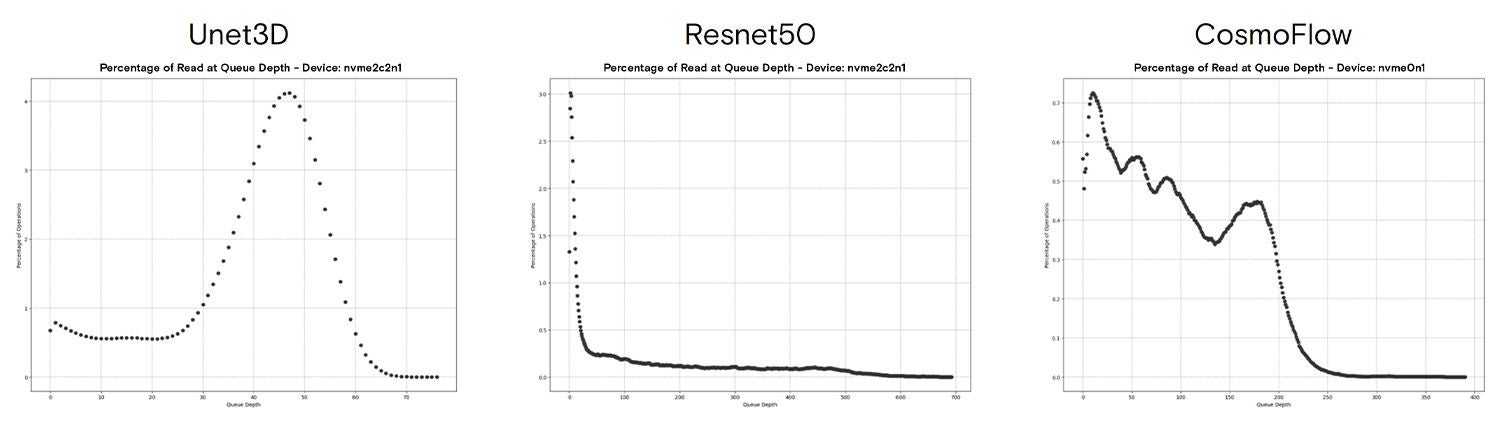

Training workloads: ResNet50, CosmoFlow, Unet3D

I’ve written and spoken in depth about the training workloads in MLPerf Storage, most recently at FMS 2024. The training benchmark definitions in the v2.0 publication are the same as the v1.0 publication, which means we can compare results across the two submission periods.

I highly recommend reviewing that talk, but the main takeaway is that training ingest can represent a broad range of workloads. For the three models represented in MLPerf Storage, we see a range of transfer sizes depending on the data container format (TFRecord, for example, results in a large number of 4KB IOs).

These characteristics result in data ingest for AI training being significantly more complex than we would expect.

Checkpointing workloads: Llama 3 herd of models

We began working on the checkpointing workload in late 2023 and are extremely proud to see our work get included in MLPerf Storage. Checkpointing is now a first-class workload and it addresses the need to periodically save model and optimizer states during training, especially for large language models (LLMs) running on scale-out clusters. For a deeper dive into the benchmark, check out my blog at mlcommons.org announcing the new checkpointing workload.

The benchmark includes four model sizes, resulting in checkpoints ranging from 105 GB to 18,000 GB. For each model, we measure two phases of checkpointing: saving the checkpoint and loading the checkpoint.

The results for checkpointing show a variety of solutions for efficiently storing checkpoints and recovering from a previous checkpoint. Huge shoutout to IBM, which achieved a recovery speed over 600 GiB/s for the 1 trillion parameter model!
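To put those numbers in perspective, a quick back-of-the-envelope calculation converts checkpoint size and throughput into wall-clock time. The sketch below is illustrative only: it assumes the checkpoint sizes are decimal gigabytes, and applying the 600 GiB/s figure to both the smallest and largest checkpoint is an assumption made for the sake of the arithmetic, not a reported result.

```python
GB = 10**9    # decimal gigabyte, assumed unit for the checkpoint sizes
GiB = 2**30   # binary gibibyte, unit of the quoted throughput

def transfer_seconds(size_gb, throughput_gib_per_s):
    """Seconds needed to move a checkpoint of size_gb at the given rate."""
    return (size_gb * GB) / (throughput_gib_per_s * GiB)

if __name__ == "__main__":
    for size_gb in (105, 18_000):
        seconds = transfer_seconds(size_gb, 600)
        print(f"{size_gb:>6} GB at 600 GiB/s: {seconds:.2f} s")
```

Under these assumptions, the largest checkpoint would be read back in roughly 28 seconds, which shows why recovery throughput matters when an entire scale-out cluster sits idle until the load completes.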

Emerging workloads: Vector databases

Vector databases are optimized for storing and searching high-dimensional vectors — typically embeddings generated by AI models. These systems power similarity search in applications like recommendation engines, image and text retrieval, fraud detection and increasingly, retrieval-augmented generation (RAG) for LLMs.

Unlike traditional databases, vector DBs prioritize approximate nearest neighbor (ANN) search over exact matches, trading off precision for speed and scalability. As datasets grow year-over-year, the need to offload vector indexes from memory to disk has made storage performance a first-class concern.

While there are multiple ways to integrate storage into a vector search system, a primary method is to use an index designed to query from fast storage. My teammate Sayali Shirode recently presented some of her findings at FMS (Future of Memory and Storage) in her session Discussion and analysis of new vector database benchmark in MLPerf Storage.

In this session, Sayali showed the analysis of using DiskANN in Milvus with a dataset of 100 million vectors. The key findings of this work that dictate storage decisions are:

- Small IOs

- Low queue depth with bursts of very high QD

Sayali showed that the latency-sensitive domain (low batch size, single process) performs 100% 4KB operations to the index on the disk. When scaled up to maximum throughput (high batch size and multiple simultaneous processes), we saw the same behavior: 100% 4KB operations to the index on disk.

The second finding builds on the first. When we look at the histogram of queue depths for the workload, we see that the majority of IOs are inserted into the queue at a relatively low position. The single process peaked at QD7 with a tail to QD35. The 64-process test showed a similar peak at a “low” queue depth of QD15 but had a long tail out to QD400+.

Scaling the number of threads or batch size higher than tested resulted in huge increases in latency with minimal increases in query throughput. These together show that storage requirements for DiskANN are to provide many 4KB IOs while minimizing latency and optimizing QoS.
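The relationship between queue depth, latency and throughput can be approximated with Little's law (average outstanding IOs = IOPS × average latency). The sketch below uses the queue depths mentioned above, but the latency values are hypothetical placeholders chosen only to show the shape of the trade-off; they are not measurements from the study.

```python
def iops_from_queue_depth(queue_depth, latency_us):
    """Little's law rearranged: IOPS = outstanding IOs / average latency."""
    return queue_depth / (latency_us * 1e-6)

def bandwidth_mib_s(iops, io_size_bytes=4096):
    """Bandwidth in MiB/s for a given IOPS rate and IO size (4 KiB default)."""
    return iops * io_size_bytes / 2**20

if __name__ == "__main__":
    # (queue depth, assumed average latency in microseconds) -- hypothetical
    for qd, latency_us in [(7, 80), (15, 100), (400, 2000)]:
        iops = iops_from_queue_depth(qd, latency_us)
        print(f"QD{qd:<4} latency {latency_us:>5} us -> "
              f"{iops:>10,.0f} IOPS, {bandwidth_mib_s(iops):>8,.1f} MiB/s")
```

With these made-up latencies, pushing the queue depth from 15 to 400 raises throughput only modestly while latency grows twentyfold, which mirrors the pattern described above: deeper queues mostly add waiting time once the device is saturated.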

Would you like to know more?

For more information on vector search and DiskANN, check out Alessandro Concalves’ (Solidigm) presentation at SDC, Scaling RAG with NVMe: DiskANN’s hybrid approach to vector databases indexing. Alessandro has been working on vector database benchmarking with the MLPerf Storage working group since we began the initiative earlier this year. We also have a benchmarking tool that can generate synthetic datasets and queries. It allows us to test scaled-up datasets, but it does not verify the accuracy of results, so it should not be used to compare different indexes against each other without some additional accuracy testing. The tool is under active development, so use the GitHub issues for requests or bugs.

KV cache management & reuse

KV caching is a complex topic that suffers from poor naming. The important thing to know is that an LLM generating tokens needs a KV cache for the query. The KV cache is effectively the short-term memory of a transformer-based LLM during inference.

KV cache offload refers to using other memories to store parts of the KV cache while doing token generation. This is a different part of the workflow than we’re discussing here.

KV cache management and reuse refers to storing the KV caches of queries after token generation is complete for possible future reuse.

This can happen if you pick up a conversation with an LLM after leaving for a period of time or if other queries need to refer to the same “context” (successive queries from a coding assistant against the same code base or multiple questions about a long document). Loading the KV cache from disk can be quicker than recomputing it and frees compute resources for generating more output tokens.
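To get a feel for the data volumes involved, the size of a KV cache can be estimated from the model shape: two tensors (keys and values) per layer, each holding one vector per KV head per token. The sketch below is a rough estimate under stated assumptions. The model dimensions, the 16-bit value size and the 10 GB/s read rate are hypothetical and do not describe any specific model or drive.

```python
def kv_cache_bytes(num_tokens, num_layers, num_kv_heads, head_dim,
                   bytes_per_value=2):
    """Estimate KV cache size; the factor of 2 covers keys plus values."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens

def load_seconds(size_bytes, read_gb_per_s):
    """Time to read size_bytes from storage at read_gb_per_s (decimal GB/s)."""
    return size_bytes / (read_gb_per_s * 10**9)

if __name__ == "__main__":
    # Hypothetical model shape: 80 layers, 8 KV heads, head dimension 128.
    per_token = kv_cache_bytes(1, 80, 8, 128)
    context = kv_cache_bytes(100_000, 80, 8, 128)
    print(f"per token: {per_token / 1024:.0f} KiB")
    print(f"100k-token context: {context / 10**9:.1f} GB")
    print(f"load time at an assumed 10 GB/s: {load_seconds(context, 10):.1f} s")
```

Whether loading beats recomputing depends on how long the prefill for the same context would take on the accelerator, which this sketch does not model.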

The industry is still developing this workflow, with significant research and development focused on optimizing these processes. The MLPerf Storage Working Group (among others) is working to develop and define a benchmarking process to represent the KV cache management layer and how it will interact with storage.

Our initial analysis shows that the workload will be primarily large transfers (2MB+) with high write throughput rates, as we generally see in caching systems. In high-throughput GenAI systems, the KV cache generation rate can be tens of terabytes per GPU per day and higher.

This drives storage requirements that focus on optimizing the endurance of the underlying system (overprovisioning, SLC versus TLC versus QLC, flexible data placement, etc.).
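A simple endurance estimate shows why this matters. The sketch below converts a daily write volume into drive writes per day (DWPD) and into the time needed to consume a drive's rated endurance. Every number in it is a hypothetical placeholder: 20 TB/day stands in for "tens of terabytes," and the drive capacity and rating are invented for illustration. It also ignores write amplification, which would shorten the result further.

```python
def drive_writes_per_day(daily_writes_tb, drive_capacity_tb):
    """Full-drive writes per day implied by a daily write volume."""
    return daily_writes_tb / drive_capacity_tb

def years_until_rated_endurance(daily_writes_tb, rated_tbw):
    """Years until the rated terabytes-written (TBW) budget is consumed."""
    return rated_tbw / daily_writes_tb / 365

if __name__ == "__main__":
    daily_writes_tb = 20.0       # hypothetical KV cache writes per GPU per day
    capacity_tb = 7.68           # hypothetical drive capacity
    rated_dwpd, warranty_years = 1.0, 5   # hypothetical endurance rating
    rated_tbw = capacity_tb * rated_dwpd * 365 * warranty_years

    print(f"required DWPD: {drive_writes_per_day(daily_writes_tb, capacity_tb):.2f}")
    print(f"years to rated endurance: "
          f"{years_until_rated_endurance(daily_writes_tb, rated_tbw):.2f}")
```

In this made-up scenario, a single drive rated for one write per day would need to absorb more than two and a half, so the write load has to be spread across more drives, or absorbed by higher-endurance media or extra overprovisioning.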

Would you like to know more?

For more information on using storage for KV caches, check out Ugur Kaynar’s (Dell) presentation at SDC, KV-cache storage offloading for efficient inference in LLMs. Ugur has also been contributing to the MLPerf Storage Working Group across multiple domains for the v2.0 development cycle.

For the specifics of KV cache management, along with some numbers that put into perspective why KV cache is such a big topic, please read my previous blog post, 1 million token context: The good, the bad and the ugly.

The future of storage for AI systems

As we look to benchmark and characterize the storage for AI systems of today, the future is wide open for what we might need. If you’re interested in tracking the bleeding edge of storage for AI, check out the following sessions at SDC:

John Mazzie (Micron): Small granularity graph neural network training and the future of storage

John Groves (Micron): Famfs: Get ready for big pools of disaggregated shared memory

CJ Newburn & Wen-Mei Hwu (Nvidia): Storage implications for the new generation of AI applications